AI Risk Disclosures in the S&P 500: Reputation, Cybersecurity, and Regulation

This report analyzes how the largest US public companies disclose artificial intelligence (AI) risks in their 2023–2025 annual filings, providing insight into the issues shaping board agendas, investor expectations, and regulatory oversight in the years ahead.

Trusted Insights for What’s Ahead®

- AI has rapidly become a mainstream enterprise risk, with 72% of S&P 500 companies disclosing at least one material AI risk in 2025, up from just 12% in 2023.

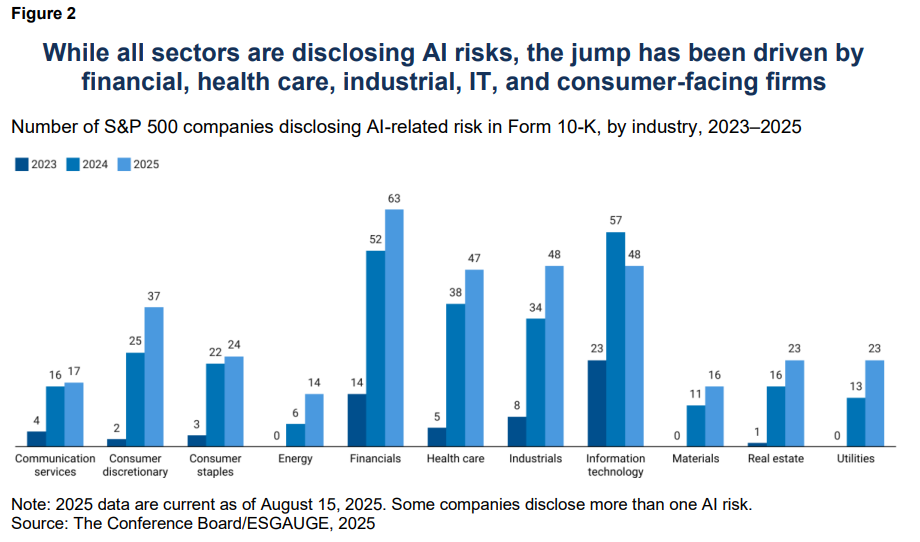

- AI risk disclosure has surged in financials, health care, industrials, IT, and consumer discretionary—frontline adopters facing regulatory scrutiny over data and fairness, operational risks from automation, and reputational exposure in consumer markets.

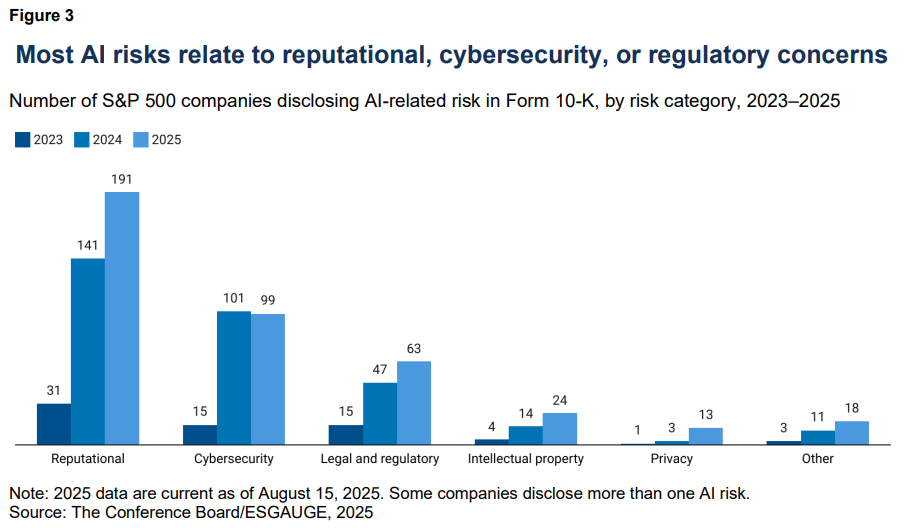

- Reputational risk is the top AI concern in the S&P 500, making strong governance and proactive oversight essential as companies warn that bias, misinformation, privacy lapses, or failed implementations can quickly erode trust and investor confidence.

- Cybersecurity is a central concern as AI expands attack surfaces and enables more sophisticated threats, prompting boards to expect AI-specific controls, testing, and vendor oversight.

- Legal and regulatory risk is a growing theme in disclosures as firms face fragmented global AI rules, rising compliance demands, and evolving litigation exposure, all of which require directors to anticipate regulatory divergence and integrate legal, operational, and reputational oversight into AI governance.

AI risk disclosures refer to the statements US public companies include in the “Risk Factors” section of their Form 10-K, the comprehensive annual filing required by the Securities and Exchange Commission. In this section, firms outline risks that could materially affect their financial condition or future performance. Reviewing these disclosures shows how companies perceive and prioritize AI risks, where they see vulnerabilities, how practices are evolving under investor and regulatory scrutiny, and what governance expectations are emerging as AI adoption accelerates.

AI Risk Disclosure Trends

AI is now firmly recognized as a material enterprise risk in the mandated filings of the largest US public companies (Figure 1). This reflects how quickly AI has shifted from experimental pilots to business-critical applications shaping customer engagement, operations, compliance, and brand value. It also signals that boards and executives expect heightened scrutiny from investors, regulators, and other stakeholders.

Still, over one-quarter of S&P 500 firms make no explicit reference to AI, whether because their exposure is limited, potential impacts are captured under broader risk categories, or disclosure practices lag behind actual use.

The rise in AI disclosures is concentrated in the sectors most directly exposed to AI adoption: financials, health care, industrials, IT, and consumer discretionary (Figure 2). Financials and health care face regulatory and reputational risks tied to sensitive data and fairness; industrials are scaling automation and robotics; IT companies sit at the center of AI development; and consumer discretionary firms carry direct brand and reputational exposure from customer-facing applications.

When grouped into broad themes, AI risks disclosed by S&P 500 companies cluster around reputational, cybersecurity, and regulatory concerns (Figure 3). These issues are viewed as immediate and broadly material across business models, unlike sector-specific risks such as clinical safety in health care or automation hazards in industrials. Companies also highlight privacy, intellectual property, and other material risks, underscoring the wide-ranging implications of AI adoption.

The technologies and use cases referenced in filings also vary:

- Generative AI and foundation models: Large-scale language and image models carry risks tied to misinformation, copyright, brand misuse, and unreliable outputs.

- Machine learning and algorithmic decisioning: Predictive tools such as credit scoring, fraud detection, and recommendation engines raise concerns about bias, opacity, and regulatory scrutiny.

- Computer vision, sensors, and internet of things: Image recognition and sensor-driven systems in vehicles, factories, or retail are susceptible to privacy issues and system failures that can create operational or safety risks.

- Autonomy and robots: Autonomous vehicles, drones, and industrial robots carry risks of accidents, liability, regulatory barriers, and workforce disruption.

- AI supply chain and infrastructure: Dependence on cloud platforms and third-party providers creates exposure to supplier concentration, cyber threats, and capacity bottlenecks.

Reputational Risk Disclosures

Reputational risk is the most frequently cited AI concern among S&P 500 companies, disclosed by 38% of firms in 2025. It serves as a catch-all for general implementation failures as well as the most visible and immediate threats from AI adoption: biased outcomes, unsafe outputs, and brand misuse. Its prominence reflects how a single AI lapse can quickly cascade into customer attrition, investor skepticism, regulatory scrutiny, and litigation—often more rapidly than with traditional operational failures, given how AI errors propagate publicly and virally.

Key reputational issues highlighted in 2025 disclosures include:

- Implementation and adoption risks (45 companies): Firms caution that reputational damage may follow if AI projects fail to meet promised outcomes, are poorly integrated, or are seen as ineffective. They identify overpromising or mismanaging adoption as a recurring driver of stakeholder skepticism.

- Consumer-facing AI (42 companies): Companies view direct use of AI in products, services, and customer interactions as a major pressure point. They consider missteps such as errors, inappropriate responses, or service breakdowns to be highly visible and damaging, particularly for consumer-oriented brands.

- Privacy and data protection (24 companies): Some disclosures flag mishandling sensitive information as a reputational hazard. They emphasize that breaches of privacy can trigger regulatory action and strong backlash, especially in technology, health, and financials.

- Business performance and competitive risk (13 companies): Some companies note that underperforming or misaligned AI investments erode competitiveness and weaken market perception of leadership’s strategy.

- Hallucinations and inaccurate outputs (11 companies): Unreliable or erroneous AI-generated content is seen as undermining credibility, diminishing trust, and potentially drawing regulatory or legal consequences.

- Bias and fairness (7 companies): A few organizations identify discriminatory or biased outcomes as especially damaging to reputation in sectors where fairness, trust, and compliance obligations are central.

- General-unspecified (13 companies): Some disclosures cite reputational risk from AI in broad terms without detailing underlying causes, underscoring a recognition of AI as a material exposure in itself.

- Other AI reputational risks (36 companies): Additional risks cited include regulatory breaches, liability exposure, cybersecurity failures, and dependence on AI-driven models, each with potential to erode brand trust and stakeholder confidence.
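The company counts in the list above can be cross-checked against the 38% headline figure with simple arithmetic. The sketch below is illustrative only and rests on two assumptions the report does not state: that each company is counted under a single theme, and that the denominator is roughly 500 index constituents. The names `reputational_counts` and `ASSUMED_INDEX_SIZE` are hypothetical and chosen for this example.

```python
# Company counts per reputational theme, copied from the 2025 list above.
reputational_counts = {
    "Implementation and adoption": 45,
    "Consumer-facing AI": 42,
    "Privacy and data protection": 24,
    "Business performance and competitive risk": 13,
    "Hallucinations and inaccurate outputs": 11,
    "Bias and fairness": 7,
    "General-unspecified": 13,
    "Other AI reputational risks": 36,
}

# ASSUMPTION: about 500 constituents; the report does not give the exact denominator.
ASSUMED_INDEX_SIZE = 500

# ASSUMPTION: each company appears under exactly one theme, so counts can be summed.
total = sum(reputational_counts.values())
share = total / ASSUMED_INDEX_SIZE

# Rank themes from most to least frequently cited (ties keep their listed order).
ranked = sorted(reputational_counts.items(), key=lambda kv: kv[1], reverse=True)

for theme, count in ranked:
    # Show each theme's count and its share of all reputational disclosures.
    print(f"{theme:<45}{count:>4}{count / total:>8.1%}")

print(f"Total companies across themes: {total}")
print(f"Share of assumed index size:   {share:.1%}")
```

Under these assumptions the themes sum to 191 companies, or about 38% of a 500-company index, which is consistent with the headline share. If companies can appear under more than one theme, the sum would overstate the number of distinct firms.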

Looking ahead, reputational risk disclosures are likely to move further toward control-specific measures such as watermarking and provenance tools, defined bias-testing thresholds, structured postdeployment monitoring, and independent attestations. For C-Suites and boards, the priority is converting reputational concerns into actionable governance: embedding AI within enterprise risk frameworks, setting KPIs for exposure and mitigation, and distinguishing clearly between internal and customer-facing applications. Emerging agentic AI systems, largely absent from current filings, will also likely become a major reputational risk as their autonomy and unpredictability expand.

Cybersecurity Risk Disclosures

Cybersecurity risk tied to AI was cited by 20% of S&P 500 firms in both 2024 and 2025. Unlike reputational risk, which centers on external perception, cybersecurity is treated as a core enterprise risk, combining operational disruption, regulatory liability, reputational damage, and threats to critical infrastructure. Companies note that AI both enlarges their own attack surfaces through new data flows, tools, and systems and strengthens adversaries by enabling more sophisticated, scalable attacks. These risks compound traditional cybersecurity exposures, with added urgency from stricter regulatory enforcement and heavy dependence on concentrated cloud and vendor ecosystems.

Key cybersecurity issues highlighted in 2025 include:

- General AI-amplified cyber risk (40 companies): The largest group of firms describes AI as a force multiplier, intensifying the scale, sophistication, and unpredictability of cyberattacks. They warn that AI accelerates intrusion attempts, reducing detection windows and complicating defense and containment.

- Third-party and vendor risk (18 companies): Many disclosures point to vulnerabilities created by reliance on cloud providers, SaaS platforms, and other external partners. Companies emphasize that even strong internal safeguards cannot offset exposure if critical vendors are compromised.

- Data breach and unauthorized access (17 companies): Breaches remain a core concern. Financial and technology firms in particular highlight how AI-driven attacks can expose sensitive customer and business data, with consequences including regulatory penalties, customer attrition, and reputational damage.

- Ransomware and malware (10 companies): Firms caution that AI enables attackers to automate malicious code generation, increasing both the volume and sophistication of attacks. Industrials and health care providers flag ransomware as especially disruptive, with potential to halt operations and impair essential services.

- Critical infrastructure (6 companies): Utilities, defense, and energy firms stress the danger of AI-enabled attacks extending beyond digital systems into physical operations, with implications for public safety, government oversight, and service continuity.

- Operational disruption (6 companies): A small group of firms highlights the risk of AI-related cyber incidents directly disrupting business continuity. Communications, utility, and industrial companies warn that outages threaten the reliable delivery of core services.

- Phishing and social engineering (2 companies): Generative AI can make phishing campaigns more convincing, heightening the risk that employees will be deceived and leading to stolen credentials, data theft, or system compromise.

Similar to reputational risk, cybersecurity disclosures may evolve from broad statements toward specific commitments to defense strategies, including AI red teaming, independent attestations, resilience testing, and sector-level frameworks for protecting critical infrastructure. Boards should prepare for heightened scrutiny of supply chain vulnerabilities and AI-enabled adversary scenarios. Regulators are already signaling this expectation; for example, the SEC’s 2023 cyber disclosure rules require detailed board oversight reporting, and the EU’s NIS2 Directive extends accountability for supply chain security.

Legal and Regulatory Challenges

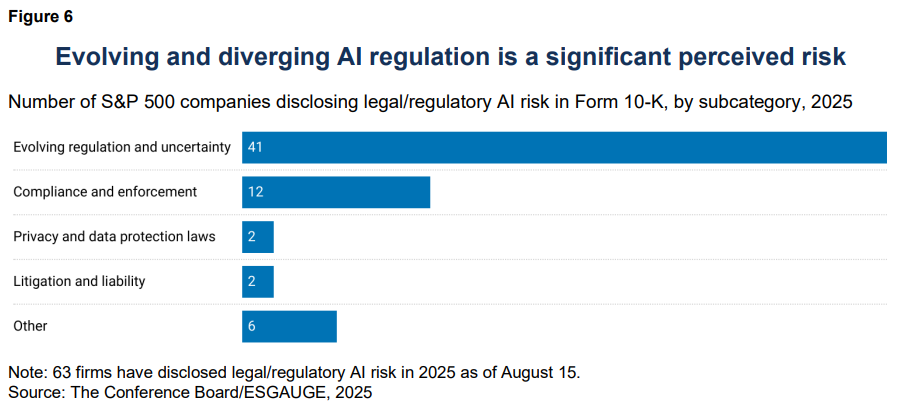

Legal and regulatory risk is a growing theme in AI disclosures. Companies emphasize both compliance with existing privacy, data protection, and consumer protection regimes and the uncertainty created by emerging AI-specific laws and cross-border frameworks (Figure 6). Unlike reputational or cybersecurity risks, which manifest quickly, legal risk is a long-tail governance challenge that can lead to protracted litigation, regulatory penalties, reputational harm, and even disruption of business models.

Key 2025 themes include:

- Evolving regulation and uncertainty (41 companies): Firms cite difficulty planning AI deployments amid fragmented and shifting rules. The EU AI Act, finalized in 2024 with phased obligations beginning in 2025–26, is frequently flagged for its strict requirements on high-risk systems, conformity assessments, and steep penalties. In the US, uncertainty over how agencies such as the Federal Trade Commission and Consumer Financial Protection Bureau will apply existing consumer protection and privacy laws adds further risk. Technology companies in particular stress that unclear regimes complicate product launches, constrain innovation, and heighten investor and public scrutiny.

- Compliance and enforcement (12 companies): Many disclosures warn that new AI-specific rules will bring heightened compliance obligations and potential enforcement actions. Firms note that meeting these requirements could raise costs, delay adoption, and expose them to sanctions if they do not meet standards. Disclosures reference EU and US federal activity, as well as some state-level activity (e.g., Colorado and Texas AI bills).

- Unclassified or cross-cutting risks (6 companies): Several filings highlight the challenge of managing legal exposure across multiple domains. For example, uncertainty remains over how courts will treat intellectual property claims related to AI training data, or who is liable when autonomous AI systems cause harm.

- Privacy and data protection laws (2 companies): Two disclosures link AI use to heightened exposure under privacy regimes such as the General Data Protection Regulation (GDPR) or Health Insurance Portability and Accountability Act (HIPAA).

- Litigation and liability (2 companies): Two company disclosures anticipate lawsuits tied to bias, misrepresentation, or intellectual property disputes. They frame these risks as financially costly and reputationally damaging, especially if class actions or high-profile cases emerge.

Legal and regulatory risks are likely to intensify as jurisdictions move at different speeds. Disclosures will become more detailed, with explicit references to AI-specific legislation, bias-audit requirements, transparency standards, and reporting mandates. Litigation will gain importance as early cases set precedents, shaping liability standards.

For boards and C-Suites, the priority will be to ensure directors can demonstrate proactive oversight to regulators and investors by tracking jurisdictional divergence, anticipating convergence in disclosure expectations, and integrating legal risk management into enterprise AI governance.

Other Emerging Risk Areas

Beyond reputational, cybersecurity, and regulatory concerns, S&P 500 companies identify other AI risks that could prove highly material as adoption scales. These risks reflect unsettled legal environments as well as strategic uncertainty around business models, customer relationships, and long-term competitiveness:

- Intellectual property (24 companies): Firms, especially in technology and consumer sectors, highlight risks spanning copyright disputes, trade-secret theft, and contested use of third-party data for model training. With case law still evolving in the US and EU, companies stress the need to monitor litigation outcomes, strengthen contractual protections, and embed intellectual property safeguards in vendor and licensing agreements.

- Privacy (13 companies): Technology, health care, and financial services firms warn of heightened exposure under the GDPR, HIPAA, and California Consumer Privacy Act/California Privacy Rights Act. Disclosures emphasize that missteps in handling personal or sensitive data could result in regulatory penalties and reputational fallout, particularly as authorities tighten rules on consent, transparency, and cross-border data transfers.

- Technology adoption (8 companies): Several firms point to risks in execution: high costs of new platforms, uncertain scalability, and the possibility of underdelivering on promised returns. They frame ineffective rollouts as diminishing competitiveness in fast-moving markets.

- Financial and market risk (6 companies): Some disclosures caution that AI could reshape competitive dynamics, with firms that fail to adopt effectively losing market share or facing revenue pressure. Broader risks include investor skepticism, valuation volatility, and potential market realignments as AI alters industry structures.

Beyond today’s disclosures, new categories of AI risk are likely to gain prominence in future filings. The environmental footprint of frontier AI models is one, given the vast energy and water demands of both training and inference. Companies, particularly in data-intensive sectors, can expect scrutiny over data center emissions, cooling-related water use, and the alignment of AI adoption with net-zero commitments. Workforce disruption and labor relations represent other emerging concerns, as AI-driven automation reshapes employment levels, skill requirements, and collective bargaining dynamics. Finally, agentic AI systems—autonomous, goal-directed models—remain largely absent from disclosures but are advancing rapidly from research labs to commercial use. Their unpredictability, diminished human oversight, and unclear liability may create some of the most significant governance and reputational challenges in the next disclosure cycle.

For C-Suites and boards, the priority is to expand governance frameworks beyond today’s narrow categories and prepare for a broader risk landscape where AI intersects with sustainability reporting, workforce stability, supply chain resilience, and societal trust.

Conclusion

AI has shifted in just two years from a niche technology issue to a core enterprise risk across the S&P 500. Disclosures reveal consistent themes around reputation, cybersecurity, regulation, and emerging pressures including intellectual property, privacy, and market disruption. For business leaders, the imperative is twofold: integrate AI into governance and risk frameworks with the same rigor applied to financial, operational, and compliance risks, and elevate disclosure practices to provide transparent, decision-useful information that builds confidence among investors, regulators, and broader stakeholders.

This article is based on corporate disclosure data from The Conference Board Benchmarking platform, powered by ESGAUGE.
